User Experience Strategy, Research, and Design

Edward Stull helps teams research audiences and create products. This process often involves design research, user experience (UX) strategy, information architecture, product design, and writing. A bit of luck, too.

Case Studies

Selected work covering UX and customer experience (CX) strategy, research, and design services

Book

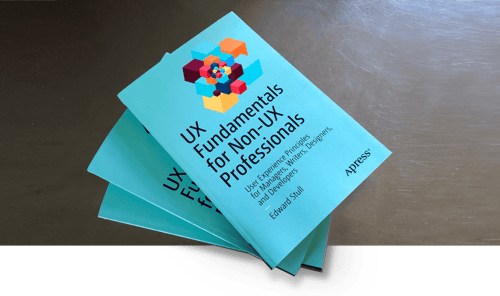

UX Fundamentals for Non-UX Professionals: User Experience Principles for Managers, Writers, Designers, and Developers. Published by Apress (Springer Nature).

Contact

Here is a list of various ways to contact me.

Why reciprocity is vital to any user experience, from gift-giving to applications.

Offsite link • 8 min read

If you were suddenly thrust into the user’s role, would your experience be a good one?

Offsite link • 5 min read

Our brains search for stimuli, whether we are riding a train or viewing a mobile app.

Offsite link • 5 min read

When designing experiences, favor what users already know — affordances and signifiers.

Offsite link • 5 min read

Recent Book

UX Fundamentals for Non-UX Professionals

Demystify UX and its rules, contradictions, and dilemmas. This book enables you to participate fully in discussions about UX, as you discover the fundamentals of user experience design and research.

Strategy Services

- Brainstorming and meeting facilitation

- Design-thinking exercises

- Scenario planning

- Jobs-to-be-done (JTBD)

- Business model canvas exercises

- Kano modeling

- Value proposition modeling

Research Services

- Design research

- Ethnographies and contextual inquiries

- Interviews

- Surveying and Top Tasks analysis

- Heuristic audits

- Competitive and comparative audits

- A/B and multivariate testing

- Usability testing

Design and Content Services

- Wireframing

- Rapid prototyping

- Journey and empathy mapping

- Content audits

- Content strategy

- Microcopy and UX writing

- Proposal writing